We Sprung a Leak, Captain!

A streak of lightning flashed across the ominous sky, followed shortly by the ear-splitting boom of thunder. The ship rocked violently against the giant waves, her crew and passengers holding on for dear life. The captain stood in the icy rain, hollering orders to the sailors on deck. He had grown hoarse from raising his voice, but he couldn’t rest now: too many lives were at stake. Twice he almost slipped on the wet floorboards, and he had a feeling he’d lose his footing on the third or fourth slip. A sailor exclaimed something he couldn’t quite hear. The sailor yelled again, but this time he heard it loud and clear: “We sprung a leak, Captain!”

The storm subsided, and my laptop managed to reboot without problems. Earlier, I had been experimenting with linked lists and binary trees in C, using malloc() and pointers to allocate, link and access blocks of memory. I was fully aware of the risks of using such a low-level language: C is infamous for undefined behaviour and memory leaks. If the programmer isn’t careful, his program can crash spectacularly, because code with undefined behaviour gives the compiler licence to do some funny business. I knew that in C you have to free memory manually, since there is no garbage collector to do it for you, but how and why you did it wasn’t apparent to me when I first delved into dynamic memory allocation. I found out the hard way, and caused my laptop to run out of RAM in the process!
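For readers who haven’t tried this yet, here is a minimal sketch of the kind of linked list described above: each node is a block of heap memory obtained with malloc(), and the blocks are chained together through pointers. It is not the code I was running that day; `struct node`, `push_front`, `list_length` and `free_list` are hypothetical names chosen for illustration. Note that the list comes with its own clean-up function.

```c
#include <stdlib.h>

/* One list node: a value plus a pointer to the next heap block. */
struct node {
    int value;
    struct node *next;
};

/* Allocate a node on the heap and link it in at the front of the list.
   Returns 0 on success, or -1 if malloc() fails (the list is left unchanged). */
int push_front(struct node **head, int value)
{
    struct node *n = malloc(sizeof *n);
    if (n == NULL)
        return -1;
    n->value = value;
    n->next = *head;   /* new node points at the old first node */
    *head = n;         /* and becomes the new first node */
    return 0;
}

/* Count the nodes by following the next pointers until NULL. */
size_t list_length(const struct node *head)
{
    size_t count = 0;
    for (; head != NULL; head = head->next)
        count++;
    return count;
}

/* Free every node. The next pointer is saved first, because reading
   head->next after free(head) would be undefined behaviour. */
void free_list(struct node *head)
{
    while (head != NULL) {
        struct node *next = head->next;
        free(head);
        head = next;
    }
}
```

Usage: start with `struct node *list = NULL;`, call `push_front(&list, value)` for each value, and call `free_list(list)` exactly once when you are done with the list.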

In most cases, the novice programmer does not need to concern himself with computer memory, for he will mostly be working with local variables and fixed-size arrays, which live on the stack. Dynamic memory, on the other hand, lives on the heap. The difference between the two is significant. Memory on the stack is managed automatically, like clockwork, with the help of the CPU’s stack pointer: space for a function’s local variables is reserved when the function is called and released when it returns. Memory on the heap is more hands-on, in the sense that the programmer has to allocate the memory with malloc(), keep track of how much of it is in use and release it with free() himself. A stack variable keeps the same size for its whole lifetime; a block on the heap can be expanded or shrunk (with realloc()) where necessary. One would be forgiven for thinking that dynamic memory on the heap is the way to go whenever you don’t know beforehand how many elements (for an array or structure, for instance) you will be presented with: the number could range from none to a million. Tempted as the programmer may be, he must understand the pros and cons of each kind of memory before proceeding with his code.
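To make the contrast concrete, here is a minimal sketch. The stack array in `sum_fixed` has a size fixed at compile time and vanishes on its own when the function returns; the heap-backed `struct int_vec` grows with realloc() as values arrive, but its owner must call free() eventually. All of these names are hypothetical, and doubling the capacity on each growth is just one common strategy, assumed here for simplicity.

```c
#include <stdlib.h>

/* Stack: the size of `fixed` is decided at compile time, and its memory
   is reclaimed automatically when the function returns. */
int sum_fixed(void)
{
    int fixed[4] = {1, 2, 3, 4};
    int total = 0;
    for (size_t i = 0; i < 4; i++)
        total += fixed[i];
    return total;
}   /* `fixed` disappears here: nothing to free */

/* Heap: a growable array of ints. The caller owns `data` and must
   release it with vec_free() when done. */
struct int_vec {
    int *data;
    size_t len;   /* elements in use */
    size_t cap;   /* elements allocated */
};

/* Append a value, doubling the capacity with realloc() when full.
   Returns 0 on success, -1 if realloc() fails. */
int vec_push(struct int_vec *v, int value)
{
    if (v->len == v->cap) {
        size_t new_cap = v->cap ? v->cap * 2 : 4;
        /* realloc(NULL, n) behaves like malloc(n), so the first push works too */
        int *bigger = realloc(v->data, new_cap * sizeof *bigger);
        if (bigger == NULL)
            return -1;   /* the old block is still valid and still ours */
        v->data = bigger;
        v->cap = new_cap;
    }
    v->data[v->len++] = value;
    return 0;
}

/* The manual half of the bargain: give the heap block back. */
void vec_free(struct int_vec *v)
{
    free(v->data);
    v->data = NULL;
    v->len = v->cap = 0;
}
```

Whether the input holds zero values or a million, `vec_push` handles it. That flexibility comes at a price, though: forget the final `vec_free` and the block stays allocated until the program exits.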

Unfortunately, I took no such precautions. I was merely interested in finding out how dynamic memory could be used to my advantage, disregarding the responsibility that came along with it. I attempted to recursively create the nodes of a binary tree; the C program I wrote allocated memory with malloc() but never released it with free(). What’s worse, I failed to ensure that the recursion had proper exit points (base cases). Big mistake. My program (unsurprisingly) crashed every time I recompiled and ran it. But unbeknownst to me, while it ran, the allocated memory was steadily eating up my RAM. The compiled program was probably riddled with undefined behaviour, happily gobbling up any memory it could get its grubby hands on. Chomp, chomp, chomp…
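My original code is long gone, so what follows is only a minimal sketch of what it could have looked like with both mistakes fixed, assuming a complete binary tree of a given depth. `build_tree`, `free_tree` and `count_nodes` are hypothetical names. The recursion stops at an explicit base case (`depth <= 0`), and every node allocated with malloc() is eventually released by a post-order `free_tree`, which frees both subtrees before the node that points to them.

```c
#include <stdlib.h>

struct tree_node {
    int value;
    struct tree_node *left;
    struct tree_node *right;
};

/* Post-order free: release both subtrees before the node itself,
   because the node holds the only pointers to its children. */
void free_tree(struct tree_node *n)
{
    if (n == NULL)
        return;                      /* base case */
    free_tree(n->left);
    free_tree(n->right);
    free(n);
}

/* Recursively build a complete tree of the given depth. Children get the
   labels 2*value and 2*value+1 (an arbitrary numbering for illustration;
   keep depth small so the labels don't overflow an int).
   Returns NULL for depth <= 0, or if any allocation fails. */
struct tree_node *build_tree(int depth, int value)
{
    if (depth <= 0)
        return NULL;                 /* base case: the recursion's exit point */

    struct tree_node *n = malloc(sizeof *n);
    if (n == NULL)
        return NULL;
    n->value = value;
    n->left = build_tree(depth - 1, 2 * value);
    n->right = build_tree(depth - 1, 2 * value + 1);

    /* Above the bottom level both children must exist; a NULL child means
       an allocation failed, so release this whole subtree instead of leaking it. */
    if (depth > 1 && (n->left == NULL || n->right == NULL)) {
        free_tree(n);
        return NULL;
    }
    return n;
}

size_t count_nodes(const struct tree_node *n)
{
    if (n == NULL)
        return 0;
    return 1 + count_nodes(n->left) + count_nodes(n->right);
}
```

A tree of depth 3 built with `build_tree(3, 1)` has 7 nodes; a single `free_tree` call on the root gives all of them back.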

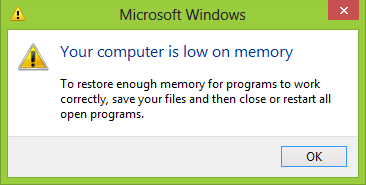

Then, this happened:

Ah, great. Which brings us back to the present, now that my computer is up and running again after a quick reboot. I am writing this postmortem to establish what went wrong and to highlight the steps that can be taken to prevent future occurrences of this incident. I am guessing I won’t be the first, nor the last, programmer to make this mistake. But hey, that’s what you get when most good programmers are self-taught anyway.

Alright, on with my analysis. First of all, I hadn’t realized how intricately a computer depends on its memory. The mere thought of wasting my precious RAM, only 4 GB in size (about 2 GB of which is taken up by Windows processes on average), is perhaps blasphemous to most software engineers, let alone computer scientists. Thankfully, Windows had preventive measures in place to ensure that it kept enough RAM for its critical processes in case one or more programs started to hog too much memory.

Secondly, I should have double-checked my code before proceeding and made sure that every block of memory I allocated was properly deallocated. For some reason, online programming tutorials on dynamic memory allocation don’t stress the importance of freeing memory enough; they simply show you how to allocate it, leaving you oblivious to the fact that it has to be deallocated afterwards to prevent memory leaks. Oops!

Finally, perhaps I was acting under the impression that computer memory is abundant and that I could use it as I please. There’s a big difference between storage on the hard drive and Random Access Memory. RAM is much smaller because it only stores data temporarily, while programs are running, and its space is constantly reused for new data. That, however, does not give me the luxury of allocating memory dynamically and expecting it to magically clean itself up. That’s why manual memory management (pairing every malloc() with a free() in C, or every new with a delete in C++) is important. While some languages like Java offer automatic garbage collection so that the programmer doesn’t have to worry about all of this, that convenience comes with some performance overhead.
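One low-tech way to do that double-checking is to route allocations through wrappers that count how many blocks are still live, then confirm the count is back to zero when you expect it to be. This is a minimal sketch: `counted_malloc`, `counted_free` and `outstanding_allocations` are hypothetical helpers, not a standard API, and dedicated leak checkers such as Valgrind or AddressSanitizer do this job far more thoroughly.

```c
#include <stdlib.h>

/* Number of blocks currently allocated through the wrappers below. */
static long live_allocations = 0;

void *counted_malloc(size_t size)
{
    void *p = malloc(size);
    if (p != NULL)
        live_allocations++;
    return p;
}

void counted_free(void *p)
{
    if (p != NULL)
        live_allocations--;
    free(p);   /* free(NULL) is a harmless no-op */
}

long outstanding_allocations(void)
{
    return live_allocations;
}

/* Leaky: the buffer is allocated but never released. */
void leaky_function(void)
{
    char *buf = counted_malloc(64);
    (void)buf;   /* forgot counted_free(buf): 64 bytes leak on every call */
}

/* Fixed: every allocation is paired with a free on every path. */
void fixed_function(void)
{
    char *buf = counted_malloc(64);
    if (buf == NULL)
        return;
    buf[0] = '\0';
    counted_free(buf);
}
```

Calling `fixed_function` leaves the counter at zero, while each call to `leaky_function` bumps it by one: exactly the slow, silent growth that ate my RAM, only now it is visible.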

Thankfully, I hadn’t written myself a virus. My main takeaway from this is that our computers are more fragile than we realize, heavily relying on code that was written and tested by developers who are concerned about performance and stability. As much as we marvel at how much our computers can do, the way they can multitask and run resource-intensive games, they are, at their core, just machines that perform input and output operations.